An essay about MapML, part 4: Thumbs-up for document accessibility

As the date for the OGC-W3C workshop on web maps looms, I’ve been catching up with some reading on the subject.

One of those things I’ve caught up with is some beautiful accessibility analysis of current web mapping frameworks:

I must confess that I’m biased on the subject: My brain holds knowledge about HTML, CSS, DOM, GIS, WebGL and a plethora of arbitrary CompSci topics and other TLAs… but that leaves little room for ARIA and Orca/JAWS.

However, my first ever website was hosted on one of my alma mater’s VAX/VMS mainframes - we had VT320 terminals, with a lynx WWW browser. If a website can be seen with a text-only web browser, then I consider it accessible. So at least I have a modicum of criteria when it comes to determining accessibility.

With that in mind, I must say (and it pains me to say this): I don’t have much faith that a <mapml> standard will improve accessibility. Please bear with me as I explain why the reason is not technical. It starts with my own thumb:

Part 4: Thumbs-up for document accessibility

Since one of the topics for the workshop is how maps integrate with other web standards, I decided to dust off one of my proofs of concept: take the Leaflet handler demo for DeviceOrientation events and adapt it to the shiny new Sensor API. The idea is that, with a phone/tablet in hand, the user can tilt it in order to move the map around.

I changed my mind at the last minute, and instead of gyroscope support, I implemented joystick/gamepad support via the Gamepad API. It’s just 100 lines of code, and there’s a working demo (though you’ll need specific hardware, i.e. a USB gamepad):

Something that surprised me is that it feels good. There’s a certain tactility to it; with hardly any delay at all, the movement syncs up with the pressure applied and it… just feels good, feels like natural movement. And that’s taking into account that I’m using a cheap (literally 6€) knockoff gamepad with bad sensitivity and crappy deadzones on the analog thumbsticks. So I think that there has to be a use for this.
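To give an idea of why even crappy deadzones are manageable, here is a minimal sketch of the arithmetic involved. It is not the demo's actual code: the function names, the 0.2 deadzone and the 15 pixels-per-frame speed are assumptions picked for illustration. The only things taken from the real APIs are that the Gamepad API reports each axis as a number between -1 and 1, and that Leaflet maps have a `panBy()` method.

```typescript
// Hypothetical helpers: turn raw thumbstick readings into a pan offset in pixels.
// Axis values are assumed to lie in [-1, 1], as reported by the Gamepad API.

interface PanOffset {
  dx: number;
  dy: number;
}

function applyDeadzone(value: number, deadzone: number): number {
  const magnitude = Math.abs(value);
  if (magnitude <= deadzone) {
    // Readings this small are treated as stick noise and ignored.
    return 0;
  }
  // Rescale so the output ramps from 0 (at the deadzone edge) to 1 (full tilt).
  const scaled = Math.min((magnitude - deadzone) / (1 - deadzone), 1);
  return value < 0 ? -scaled : scaled;
}

function stickToPanOffset(
  axisX: number,
  axisY: number,
  deadzone: number, // assumed tuning value, e.g. 0.2 for a sloppy stick
  pixelsPerFrame: number // assumed maximum pan speed
): PanOffset {
  return {
    dx: applyDeadzone(axisX, deadzone) * pixelsPerFrame,
    dy: applyDeadzone(axisY, deadzone) * pixelsPerFrame,
  };
}

// In a browser, something along these lines would run once per animation frame:
//   const pad = navigator.getGamepads()[0];
//   if (pad) {
//     const offset = stickToPanOffset(pad.axes[0], pad.axes[1], 0.2, 15);
//     map.panBy([offset.dx, offset.dy], { animate: false }); // `map`: a Leaflet map
//   }
```

Rescaling after the deadzone (instead of just cutting values off) is what keeps the movement proportional to the pressure applied, without a sudden jump when the stick leaves the dead area.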

This prompted a couple of questions:

Should hypothetical <mapml> compliant implementations support this by default? I don’t think so, since the use cases are niche.

Should they allow being extended to support this kind of joystick interaction? Maybe. Maybe there’s a consensus for “keep the interactions consistent across implementations”, maybe not.

Shall web developers ignore the standards and go out of their way to implement extensions like this? I think the answer is yes, and inevitably so. I base that answer on my experience of how the <video> element developed over the years.

Cue the Buggles.

Interlude: <video> killed the <embed> star

I do remember the times before <video> (it’s OK to call me old). It happens that I did work with MJPG and crappy video encoding back in 2002, along with ActiveX embedded video players. Yes, ActiveX. And the VLC plugin for Mozilla. And the flash jwplayer came and provided a modicum of comfort. Those were the times.

The user experience for video back in the day, both for the user (“I have to download what and install it where?”) and the web developer (user agent sniffing plus an <embed> inside an <object> with an MJPG fallback in the form of an <img>) was quite abysmal. A <video> element back then made sense.

So let’s fast-forward ten years, and see what <video> has really brought us. For me, three things spring to mind:

- Chunked video, so a video player will perform an HTTP(S) request to load a few seconds of video through incomprehensible URLs and opaque proprietary APIs, then another, then another. FSM bless youtube-dl (and its mpv integration).

  We’ll get told that the reason is adaptive streaming; I won’t buy that, since the same lobby who pushed for <video> in the first place could have pushed to leverage the <source> element for that very same purpose, letting the browser switch sources as needed.

- DRM (in the form of EME). To say the very very least, it was highly controversial and most tech news sites (e.g. arstechnica) ran news items about it. The subject is impressively inflammatory in the context of W3C proceedings, so instead of writing hundreds of words explaining why I think DRM is a horrible idea and a case of E-E-E, I’ll just quote the Wikipedia article on EME as it stands at the time of this writing:

EME has faced strong criticism from both inside and outside W3C. The major issues for criticism are implementation issues for open-source browsers, entry barriers for new browsers, lack of interoperability, concerns about security, privacy and accessibility, and possibility of legal trouble in the United States due to Chapter 12 of the DMCA.

-

Consistent UI, then inconsistent UI back again. It was magical: even though the

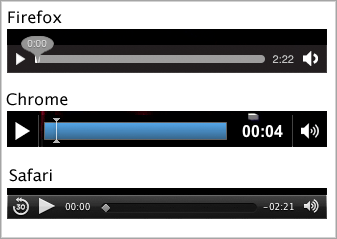

<video>spec doesn’t mandate UX, we got very similar interfaces all across the board, as illustrated in a 2010 article:

Dark grey background, white text; pause/play button, then seekbar with length indicator, then volume. As of 2020, the interfaces haven’t changed much, with a full-screen button on the right being the most noticeable difference.

But IMHO web designers are fickle creatures that don’t like tiny differences between browsers. They’re the ones who, not liking how a <button> looks in different browsers, replace it with Bootstrap’s <span class='button'>s. They push for a per-website, pan-browser consistent UI instead of a per-browser, pan-website consistent UI.

So today, things have diverged, and each site has its own controls… take YouTube:

YouTube embedded:

Twitch, with a button for the chat sidebar:

Vimeo:

DailyMotion, with the play/pause button in the middle:

RTVE is orange with a place for a “LIVE” label:

NRK uses a chevron instead of a triangle for the play icon:

And PornHub has a feature that I haven’t seen anywhere else: a histogram depicting a linear heatmap of what parts of the video are most seen (signaling the “interesting” bits):

…and that’s just for the UI, not the UX. The keyboard controls in each website are different as well. By making all <video>s look the same, now all <video>-based applications look different.

I think that the history of <video> can be repeated with <mapml>: We might get weird, non-compatible, non-documented geodata formats prone to E-E-E scenarios; we shouldn’t get DRM in the form of basemap provider crypto; and we inevitably shall get inconsistent UX because sooner or later a well-intentioned web designer will blur the line between documents and applications by pushing some company- or domain-specific UX or functionality.

So what is a web map, anyway?

Last week I read Tom MacWright’s «A clean start for the web», and I found it eye-opening. I heartily endorse it, and I suggest you read it too.

The piece tells documents and applications apart: there used to be a difference between documents (static, reusable pieces of information with clear boundaries) and applications (dynamic, ephemeral, interaction-heavy displays of information). As time has gone by, the boundary between those two concepts has blurred.

This problem is not specific to the web: I’m still befuddled at the fact that PostScript is Turing-complete, that PDF files can be scripted, and I still don’t see a reason why interactive slippy maps inside PDFs should be a thing (since I’m a hard-headed nerd who thinks that PDFs should be an exchange format for printing only).

So when it comes to web maps, I’m not sure where a static, reusable map document ends and where a dynamic, interactive map viewer application starts.

After some thinking, I believe that embeddable web maps as we understand them are applications that display an otherwise opaque document not available through any other means.

Thinking in Leaflet/OpenLayers terms makes the distinction clear: the document is the Leaflet TileLayer or the OpenLayers Source, and the application is Leaflet or OpenLayers itself. But when using some of the other embeddable web maps, the boundary is not clear at all. Take Apple’s MapkitJS as an example: it’s an application that displays underlying documents (the basemap raster/vector tiles), but the underlying documents are not specified, and there’s no documented API to otherwise fetch them. Therefore, it’s alluring (but IMHO incorrect) to think of MapkitJS instances as static, reusable pieces.

Of course, there are operational problems when referring to basemaps as documents, since by now we’re used to thinking of them as world-wide (long gone are the days when we used to think in terms of map sheets).

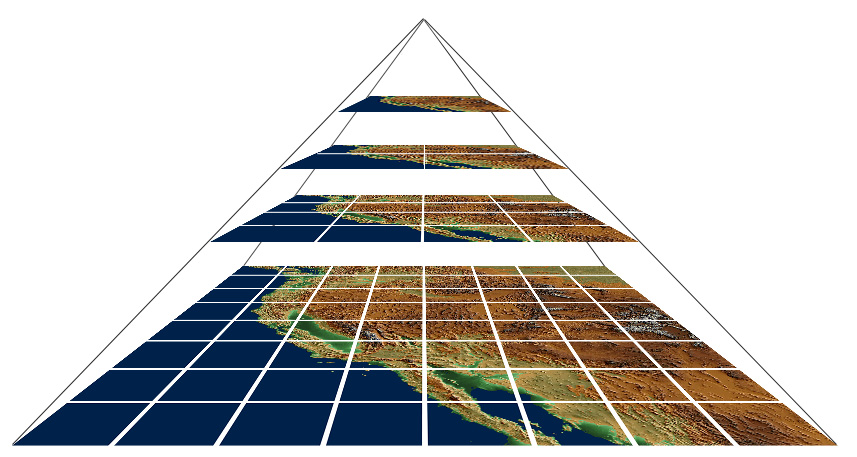

But we do have the tools to specify boundaries for geodata documents, even when they’re insanely big in CompSci terms (the OSM planet dump is ticking in at 53 GiB in binary form, and hi-res raster datasets are TiB upon TiB big). The trick is to think in terms of tile pyramids.

(Image shamelessly lifted from the OSGeo wiki)

Folks from the GIS field (including OGC/OSGeo folks) should be familiar with the concept; folks from the WWW field perhaps not so much. In a nutshell, any big enough dataset can be split into smaller pieces, and each of those smaller pieces can be uniquely addressed. Moreover, we’ve got standards such as OSGeo’s Tile Map Service, OGC’s Web Map Tile Service and Cloud-Optimized GeoTIFF, so there’s hardly any need to reinvent the wheel.
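For the WWW folks, this is roughly what "uniquely addressed" looks like in practice. The sketch below assumes the common Web Mercator "slippy map" layout (zoom level `z`, column `x`, row `y` counted from the top), which is only one of the possible tiling schemes; the function names and the `tiles.example` URL template are made up for illustration.

```typescript
interface TileAddress {
  z: number;
  x: number;
  y: number;
}

// Converts a WGS84 longitude/latitude into the address of the tile containing
// it, assuming the Web Mercator "slippy map" scheme (row 0 at the top).
function lonLatToTile(lon: number, lat: number, z: number): TileAddress {
  const tilesPerSide = Math.pow(2, z);
  const latRad = (lat * Math.PI) / 180;
  const xFraction = (lon + 180) / 360;
  const yFraction =
    (1 - Math.log(Math.tan(latRad) + 1 / Math.cos(latRad)) / Math.PI) / 2;
  // Keep results inside the pyramid level, e.g. for lon = 180 or polar latitudes.
  const clamp = (n: number): number =>
    Math.min(tilesPerSide - 1, Math.max(0, n));
  return {
    z: z,
    x: clamp(Math.floor(xFraction * tilesPerSide)),
    y: clamp(Math.floor(yFraction * tilesPerSide)),
  };
}

// TMS counts rows from the bottom instead of the top, so the row gets flipped.
function toTmsRow(tile: TileAddress): number {
  return Math.pow(2, tile.z) - 1 - tile.y;
}

// Fills in a URL template; the template passed in is up to the data provider.
function tileUrl(template: string, tile: TileAddress): string {
  return template
    .replace("{z}", String(tile.z))
    .replace("{x}", String(tile.x))
    .replace("{y}", String(tile.y));
}

// Usage, with a placeholder template:
//   tileUrl("https://tiles.example/{z}/{x}/{y}.png", lonLatToTile(0, 0, 1))
```

Each zoom level doubles the number of tiles per side, so a (z, x, y) triplet is enough to name any piece of an arbitrarily large dataset.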

With this, I can define a web map application as a way to display a basemap pyramid document together with a vector dataset document (such as one point or a polyline); and as long as the documents are standard, they can be loaded in any other application - including ones with extra accessibility features or gamepad control.
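As a toy illustration of that split, the sketch below describes one map as plain data and hands it to two different "applications". Everything in it is hypothetical: the `MapDocument` shape is not taken from MapML or any other specification, the geometries merely imitate GeoJSON, and the second function only reports what a visual viewer would load instead of drawing anything.

```typescript
type Position = [number, number]; // [longitude, latitude], as in GeoJSON

interface PointGeometry {
  type: "Point";
  coordinates: Position;
}

interface LineStringGeometry {
  type: "LineString";
  coordinates: Position[];
}

// Made-up "map document": a reference to a tile pyramid plus one vector geometry.
interface MapDocument {
  title: string;
  basemapTemplate: string; // e.g. a {z}/{x}/{y} tile URL template
  overlay: PointGeometry | LineStringGeometry;
}

function formatPosition(p: Position): string {
  // Latitude first, as most people read coordinates.
  return p[1].toFixed(4) + ", " + p[0].toFixed(4);
}

// "Application" number one: a text-only rendering, in the spirit of lynx.
function describeAsText(doc: MapDocument): string {
  const overlay = doc.overlay;
  if (overlay.type === "Point") {
    return doc.title + ": a point at " + formatPosition(overlay.coordinates);
  }
  const line = overlay.coordinates;
  return (
    doc.title + ": a line with " + line.length + " vertices, from " +
    formatPosition(line[0]) + " to " + formatPosition(line[line.length - 1])
  );
}

// "Application" number two: stands in for a visual viewer fed the same document.
function describeForViewer(doc: MapDocument): string {
  return "tiles from " + doc.basemapTemplate + " + 1 " + doc.overlay.type + " overlay";
}
```

The point is that neither function needs to know about the other: as long as the document is open, a screen-reader-friendly viewer and a gamepad-driven one can be written independently.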

At the risk of repeating myself, I’ll say that the obstacle for this is not technical (we’ve got the formats and tools already), but political (the will to provide documents/datasets in interoperable ways and formats).

Next up is Part 5: The Gold, the Moor, and the Sausage Machine